# Softmax

## Introduction

Softmax is a multi-dimensional generalization of the sigmoid function. It is commonly used:

- As a softer version of the max function: it makes the largest value more pronounced in its output, while still giving every entry some weight.
- To approximate a probability distribution: every output lies strictly between \( 0 \) and \( 1 \), and the outputs sum to \( 1 \).

## Definition

softmax(\( x_i \)) = \( \frac{e^{x_i}}{\sum_j e^{x_j}} \)
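As a quick worked example, take \( x = [1, 2, 3] \) (values rounded to three decimals). The largest input receives about two thirds of the total weight:

\[ \sum_j e^{x_j} = e^{1} + e^{2} + e^{3} \approx 2.718 + 7.389 + 20.086 = 30.193 \]

\[ \text{softmax}(x) \approx \left[ \frac{2.718}{30.193}, \frac{7.389}{30.193}, \frac{20.086}{30.193} \right] \approx [0.090, 0.245, 0.665] \]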

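With a temperature \( t > 0 \), each input is divided by \( t \) before exponentiating (\( t = 1 \) recovers the plain definition):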

softmax(\( x_i \), \( t \)) = \( \frac{e^{\frac{x_i}{t}}}{\sum_j e^{\frac{x_j}{t}}} \)
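To get a feel for the temperature, the sketch below applies the formula to \( x = [1, 2, 3] \) at a few values of \( t \). The helper name `softmax_t` and the chosen temperatures are assumptions made for this illustration only. Lower temperatures concentrate the mass on the largest input, while very large temperatures flatten the output toward the uniform distribution (here, roughly \( 1/3 \) each).

```python
import numpy as np

def softmax_t(x, t=1.0):
    # Hypothetical helper: the temperature formula above, applied to a 1-D input
    z = np.exp(np.asarray(x, dtype=float) / t)
    return z / z.sum()

x = [1.0, 2.0, 3.0]
for t in (0.5, 1.0, 2.0, 100.0):
    # Round to 3 decimals for readability
    print(f"t={t}:", np.round(softmax_t(x, t), 3))
```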

## What does softmax look like, and how does it work in code?

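```python
%matplotlib inline

import numpy as np
from matplotlib import pyplot as plt

def softmax(x, t=1):
    # Scale by the temperature, then exponentiate element-wise
    exp = np.exp(x / t)
    # Sum over the last axis; keepdims=True keeps the shape broadcastable for the division
    sum_exp = exp.sum(-1, keepdims=True)
    return exp / sum_exp
```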
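The implementation above can overflow for large inputs: in float64, `np.exp(1000.0)` is `inf`, so the division yields `nan`. A common remedy, which is not part of the original notebook, is to subtract the maximum before exponentiating. This works because softmax is unchanged when the same constant is added to every input. The name `stable_softmax` is hypothetical; the sketch keeps the same signature and last-axis behavior as `softmax`.

```python
import numpy as np

def stable_softmax(x, t=1):
    # Hypothetical name: same formula as softmax, but shifted by the max
    z = np.asarray(x, dtype=float) / t
    # Subtracting the per-row maximum leaves the result mathematically unchanged,
    # but keeps every exponent <= 0 so np.exp cannot overflow
    z = z - z.max(axis=-1, keepdims=True)
    exp = np.exp(z)
    # Normalize over the last axis, exactly as the original softmax does
    return exp / exp.sum(axis=-1, keepdims=True)

print(stable_softmax(np.array([1000.0, 1001.0])))  # ≈ [0.269, 0.731]
```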

Now let's see how softmax approximates the max function:

```python
# Random input: without a seed, the values change on every run
array = np.random.randn(5)
softer_max = softmax(array)
print(array)
print(softer_max)
```

```
[ 0.184181 1.69036063 0.28227309 -0.54696496 0.09917348]
[0.1248066 0.56281162 0.13766971 0.06007654 0.11463553]
```

The maximum value is emphasized: it receives a much larger share of the probability mass (about 0.56) than any other entry (the next largest is about 0.14). Taking the softmax-weighted average of the array makes this even clearer.

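```python
# Plain average (equivalent to array.mean())
average = array.sum() / array.size
# Softmax-weighted average: the dot product of the values with their weights
weighted = array @ softer_max
print(average)
print(weighted)
print(array.max())
```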

```
0.3418046481462643
0.9917110987649045
1.690360625256905
```
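The second printed value is \( \sum_i x_i \, \text{softmax}(x_i) \), which is what `array @ softer_max` computes. Because the softmax weights are positive and sum to \( 1 \), this is a convex combination of the entries, so it is bounded by the smallest and largest entries and can never exceed the true maximum:

\[ \min_j x_j \le \sum_i x_i \, \text{softmax}(x_i) \le \sum_i \left( \max_j x_j \right) \text{softmax}(x_i) = \max_j x_j \]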

The weighted average (about 0.99) is much closer to the true maximum (about 1.69) than the plain average (about 0.34). To bring it even closer to the maximum, lower the temperature:

```python
# A temperature of 0.1 sharpens the distribution around the maximum
colder_max = softmax(array, 0.1)
weighted = array @ colder_max
print(average)
print(weighted)
print(array.max())
```

```
0.3418046481462643
1.6903589162351116
1.690360625256905
```
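To see the trend across many temperatures rather than two, the sketch below sweeps \( t \) on a seeded random array. The seed, the `np.random.default_rng` generator, and the list of temperatures are choices made for this example, not taken from the original notebook. As \( t \) decreases, the softmax-weighted average rises monotonically from close to the plain mean toward the maximum.

```python
import numpy as np

def softmax(x, t=1):
    # Same definition as in the notebook above
    exp = np.exp(x / t)
    return exp / exp.sum(-1, keepdims=True)

rng = np.random.default_rng(0)  # fixed seed so the run is reproducible
array = rng.standard_normal(5)

# Temperatures from hot (near-uniform weights) to cold (near one-hot weights)
temperatures = [10.0, 3.0, 1.0, 0.3, 0.1, 0.03]
weighted = [array @ softmax(array, t) for t in temperatures]

for t, w in zip(temperatures, weighted):
    print(f"t={t:<5} weighted average={w:.6f}")
print("mean:", array.mean(), "max:", array.max())
```

The monotone trend is not specific to this seed: the derivative of the weighted average with respect to \( 1/t \) is the variance of the inputs under the softmax weights, which is never negative.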

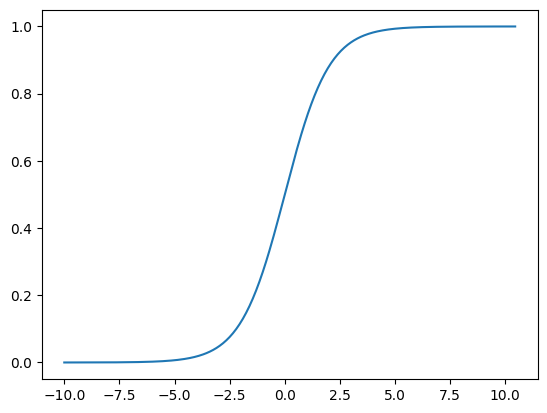

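Softmax is a generalization of sigmoid: the sigmoid \( \sigma(x) = \frac{1}{1 + e^{-x}} \) equals the first component of softmax(\( [x, 0] \)). Plotting that component over a range of \( x \) reproduces the familiar S-shaped sigmoid curve.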
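Dividing the numerator and denominator by \( e^{x} \) shows why the two agree (using \( e^{0} = 1 \)):

\[ \text{softmax}([x, 0])_0 = \frac{e^{x}}{e^{x} + e^{0}} = \frac{e^{x}}{e^{x} + 1} = \frac{1}{1 + e^{-x}} = \sigma(x) \]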

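```python
# 410 rows of [x, 0]: x runs from -10 to 10.45 in steps of 0.05, the second column stays 0
x = np.zeros([410, 2])
x[:, 0] = np.arange(-200, 210) / 20
# Row-wise softmax over the last axis
y = softmax(x)
# The first output component traces the sigmoid curve
plt.plot(x[:, 0], y[:, 0])
plt.xlabel("x")
plt.ylabel("softmax([x, 0])[0]")
plt.show()
```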
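The plot can also be confirmed numerically. This sketch compares the first softmax component against a directly computed logistic sigmoid on the same grid of \( x \) values; the `sigmoid` helper is written here for the comparison and is not part of the original notebook. The printed maximum difference should be on the order of floating-point rounding error.

```python
import numpy as np

def softmax(x, t=1):
    # Same definition as in the notebook above
    exp = np.exp(x / t)
    return exp / exp.sum(-1, keepdims=True)

def sigmoid(x):
    # Logistic sigmoid, 1 / (1 + e^{-x})
    return 1 / (1 + np.exp(-x))

xs = np.arange(-200, 210) / 20                        # same grid as the plot
pairs = np.stack([xs, np.zeros_like(xs)], axis=-1)    # rows are [x, 0]
max_diff = np.abs(softmax(pairs)[:, 0] - sigmoid(xs)).max()
print(max_diff)
```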